AI Inference Optimization Framework

The AI Optimization Engine

Make your AI models

Pruna AI is the AI Optimization Engine for ML teams seeking efficiency and productivity gains and simpler, scalable inference.

Make your AI models

Pruna AI is the AI Optimization Engine for ML teams seeking to simplify scalable inference.

Make your

AI models

Pruna AI is the AI Optimization Engine for ML teams seeking to simplify scalable inference.

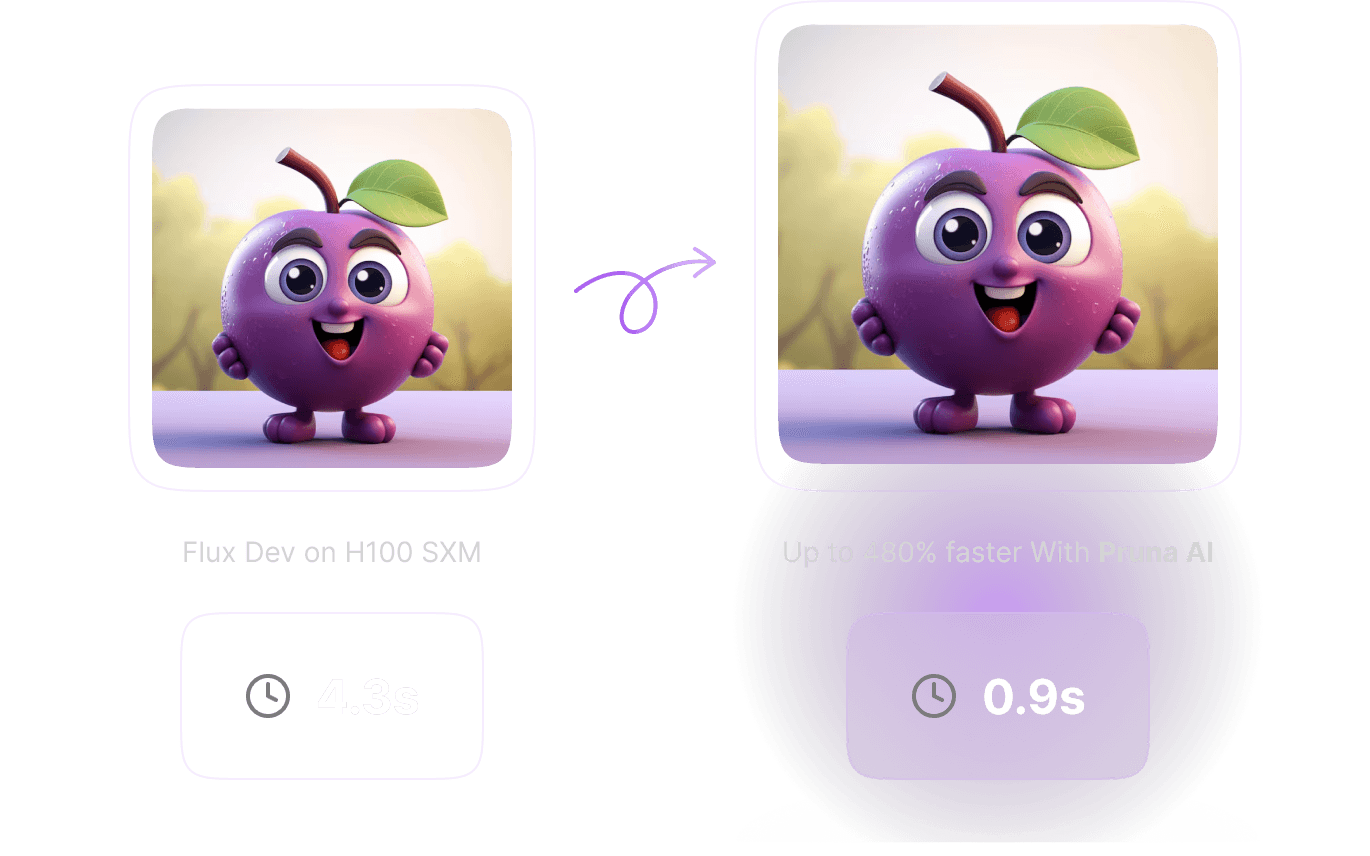

Flux Dev on H100 SXM

4.3s without Pruna AI, 0.9s with Pruna AI (up to 4.8x faster)
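As a rough illustration of how the Flux Dev figures relate, the sketch computes the speedup and the latency reduction from the two timings (4.3s and 0.9s). The helper name `speedup_summary` is hypothetical. It also rests on a simplifying assumption: serving cost scales linearly with GPU time per image, so cost falls by the same factor as latency.

```python
def speedup_summary(baseline_s: float, optimized_s: float) -> dict:
    """Compare two per-image latencies given in seconds (hypothetical helper)."""
    # How many times faster the optimized run is.
    speedup = baseline_s / optimized_s
    # Fraction of the original latency that was removed.
    reduction = 1 - optimized_s / baseline_s
    # Assumption: cost is proportional to GPU-seconds, so the cost
    # reduction factor equals the speedup factor.
    return {
        "speedup_x": round(speedup, 2),
        "latency_reduction_pct": round(100 * reduction, 1),
    }


# Flux Dev on H100 SXM timings quoted on this page.
print(speedup_summary(4.3, 0.9))
```

With these inputs the ratio comes out to about 4.78, so going from 4.3s to 0.9s is roughly a 4.8x speedup, or about 79% less time per image.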

Our Customers

Combines compression algorithms for AI models

With only a few lines of code, automatically adapt and combine the best machine learning efficiency and compression methods for your use case.

Open-source

Works with any AI model

Combines all optimization algorithms

Supports all serving platforms

Run Flux 5x faster, 5x cheaper

We tested various optimization combinations for Flux at both 512 and 1024 image sizes, using over 60 prompts. Pruna fits every use case:

Reach sub-60 ms per step

Ready to use with LoRAs

Quality evaluation metrics integrated

Lossless speed-up with ComfyUI

Compatible with ComfyUI and more!

Our framework is also compatible with various cloud and serving platforms, ensuring flexibility whether you're running models locally or scaling in the cloud.

TritonServer

ComfyUI

SageMaker

Replicate

Speed Up Your Models With Pruna AI

Inefficient models drive up costs, slow down your productivity and increase carbon emissions. Make your AI more accessible and sustainable with Pruna AI.

pip install pruna

© 2025 Pruna AI - Built with Pretzels & Croissants 🥨 🥐
